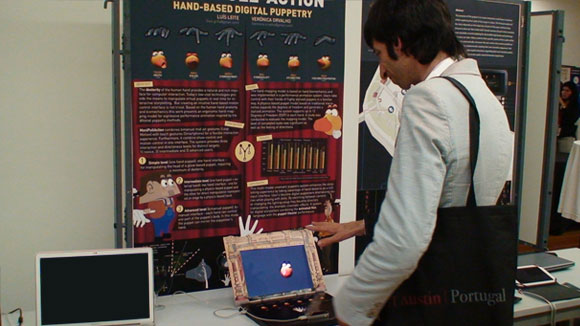

The Virtual Marionette puppet tools were used to develop several interactive installations for the animation exhibition Animar 12 at the cinematic gallery Solar in Vila do Conde. The exhibition opened on 18 February 2017.

Faz bem Falar de Amor

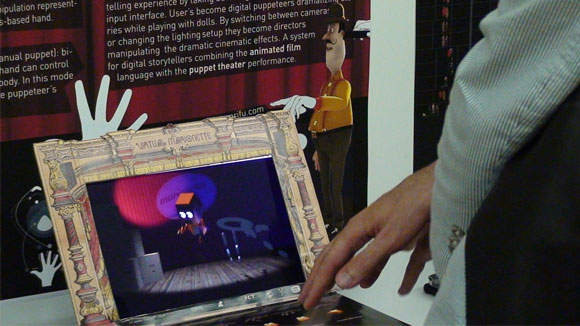

An interactive installation that challenges participants to act out, through virtual characters, some of the scenes from the animated music video that Jorge Ribeiro created for the song “Faz bem falar de amor” by Quinta do Bill.

The Puppit tool was adapted to drive two cartoon characters (a very strong lady and a skinny young man) from the visitors' body motion, captured with a single Microsoft Kinect. The virtual character skeletons have different proportions from the human body, so each puppet behaves in its own way and does not exactly mirror the participant's movement. The intention was to present this as a challenge, with participants adapting their bodies to the target puppet, but the application helps them a little. To reduce the discrepancy, I used two skeletons. A human-like clone skeleton is mapped directly to the performer's body. The virtual character skeleton is then mapped to the clone skeleton through an offset and scale function. In this way, it was possible to scale the movement of specific joints of the clone skeleton up or down, so that the virtual character behaves in a more natural yet cartoonish way. This application was developed using Unity, OpenNI and Blender.
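The offset and scale mapping between the two skeletons can be illustrated with a minimal sketch. This is not the installation's code (which was built in Unity): the `Angles`, `JointMap`, `retargetJoint` and `retarget` names are hypothetical, and joint rotations are simplified to Euler angles in degrees, whereas a real rig would more likely use quaternions.

```cpp
#include <array>
#include <cstddef>

// Joint rotation as Euler angles in degrees (a simplification for illustration).
struct Angles { float x, y, z; };

// Hypothetical per-joint mapping: character = offset + scale * clone.
struct JointMap {
    Angles offset;  // constant bias added to the clone joint rotation
    Angles scale;   // per-axis gain: > 1 exaggerates the motion, < 1 damps it
};

// Map one joint of the clone skeleton onto the matching character joint.
Angles retargetJoint(const Angles& clone, const JointMap& m) {
    return { m.offset.x + m.scale.x * clone.x,
             m.offset.y + m.scale.y * clone.y,
             m.offset.z + m.scale.z * clone.z };
}

// Apply the per-joint mapping to every joint of the clone skeleton.
template <std::size_t N>
std::array<Angles, N> retarget(const std::array<Angles, N>& clone,
                               const std::array<JointMap, N>& maps) {
    std::array<Angles, N> out{};
    for (std::size_t i = 0; i < N; ++i) {
        out[i] = retargetJoint(clone[i], maps[i]);
    }
    return out;
}
```

Keeping a separate `JointMap` per joint is what allows the motion of, say, the arms to be exaggerated while the spine stays close to the performer's pose.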

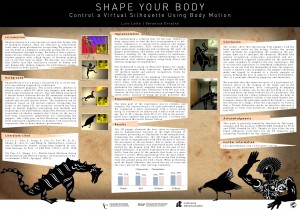

É Preciso que eu Diminua

This animated music video was created by Pedro Serrazina for Samuel Úria. It tells the story of a character who suffers from a scale problem, having outgrown the buildings around him. The character tries to shrink by pulling his arms and legs close to his body. At the same time, he needs to break free from these strict boundaries and push everything around him outward. To convey this feeling of the body expanding and pushing the boundaries away, the visitor drives a shadow-like silhouette surrounded by a contour line that grows when the participant spreads out and shrinks when the participant curls up. The silhouette, captured by a Microsoft Kinect, is projected onto a set of cubes that deform the body shape. This application was developed with openFrameworks, using ofxKinect and ofxOpenCv.
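One possible way to link the contour to the participant's pose is sketched below. The text does not say how the installation measures body expansion, so the bounding-box measure, the linear mapping, and the `expansion` and `contourOffset` functions are all assumptions made for illustration; the mask stands in for the thresholded silhouette image.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed expansion measure: fraction of the frame (0..1) covered by the
// bounding box of the silhouette. The mask is row-major, width * height,
// and any non-zero value marks a body pixel.
float expansion(const std::vector<std::uint8_t>& mask, int width, int height) {
    int minX = width, minY = height, maxX = -1, maxY = -1;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (mask[static_cast<std::size_t>(y) * width + x] != 0) {
                minX = std::min(minX, x);
                maxX = std::max(maxX, x);
                minY = std::min(minY, y);
                maxY = std::max(maxY, y);
            }
        }
    }
    if (maxX < 0) return 0.0f;  // nobody in view
    float boxArea = float(maxX - minX + 1) * float(maxY - minY + 1);
    return boxArea / (float(width) * float(height));
}

// Map the expansion value linearly to the distance (in pixels) between
// the silhouette and its surrounding contour line.
float contourOffset(float e, float minOffset, float maxOffset) {
    e = std::max(0.0f, std::min(1.0f, e));  // clamp to 0..1
    return minOffset + e * (maxOffset - minOffset);
}
```

With this measure, stretching the arms and legs enlarges the bounding box and pushes the contour outward, while curling up pulls it back in.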

Estilhaços

For this short animated film, produced by Jorge Miguel Ribeiro and addressing the Portuguese Colonial War, the intention was to trigger segments of the film when the visitor stands over a mine. Two segments of the film show two distinct perspectives on the war: one from a father who experienced it, and the other from his child, who understood it only through the father's account. A webcam captures the position of the visitor's body, and whenever the body enters the mine area the tracking application sends an OSC message with a trigger to a video player application. Both the video trigger and the video player applications were developed with openFrameworks.
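The trigger logic can be sketched as a small zone detector that fires only on the frame in which the visitor enters the mine area, so the video player is not flooded with messages while the visitor stands still. The `Rect` and `ZoneTrigger` names are hypothetical, and the `onEnter` callback stands in for the actual OSC send, whose address and arguments are not given in the text.

```cpp
#include <functional>
#include <utility>

// Hypothetical mine area, in camera image coordinates.
struct Rect { float x, y, w, h; };

bool contains(const Rect& r, float px, float py) {
    return px >= r.x && px < r.x + r.w && py >= r.y && py < r.y + r.h;
}

class ZoneTrigger {
public:
    ZoneTrigger(Rect zone, std::function<void()> onEnter)
        : zone_(zone), onEnter_(std::move(onEnter)) {}

    // Call once per frame with the tracked body position.
    void update(float px, float py) {
        const bool inside = contains(zone_, px, py);
        // Fire only on the outside-to-inside transition.
        if (inside && !wasInside_ && onEnter_) {
            onEnter_();
        }
        wasInside_ = inside;
    }

private:
    Rect zone_;
    std::function<void()> onEnter_;  // placeholder for sending the OSC trigger
    bool wasInside_ = false;
};
```

One `ZoneTrigger` per mine, each with its own callback, would be enough to start a different film segment from each position.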