Poster for the Solitária performance

Solitária is a performance-play that explores human loneliness in solitary confinement, imagining a prisoner's behavior through his mind and body. Our body is our only connection to the physical world, the only sensor capable of recognizing physical space. However, our sensory capabilities begin to misbehave, and we soon become disoriented.

Premiere on 9 November 2017 at 21h30, Blackbox d’O Espaço do Tempo.

Further performances on 10 and 11 November at 21h30, Blackbox d’O Espaço do Tempo.

Video: http://www.youtube.com/watch?v=BmSXnxXGOXk

Solitária follows the performance-play approach that has characterized the work of Alma d’Arame since its beginning. Each work starts inside a delimited space, inside its own confinement: on one hand, the space of narrative, theater, puppet, being and object; on the other, the space of programming, kinetics and multimedia. From the solitary, creative space of each of us, we watched a common space of creation being born. We all have, and need, that time with ourselves. It is in this time that each of us finds a space that is ours alone, where we can relive memories, hide, think, feel and record. Here we reach our own states. That is what this performative act is about.

It is in this laboratory space that the solitary confrontation between man and machine, between the real and the virtual, unfolds, and it is this confrontation that leads us to experiment and to search for new narratives.

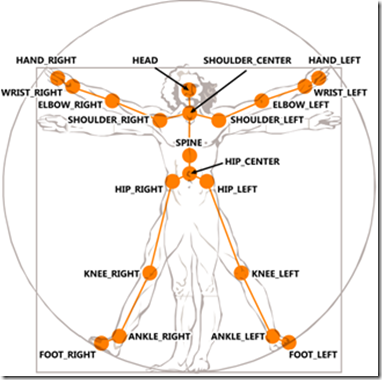

The kinetic movement of the body, and the way it occupies the empty space, builds this visual and sound narrative.

Artistic team

Artistic direction | Amândio Anastácio

Performer | Susana Nunes

Multimedia | Luís Grifu

Music | João Bastos

Puppet | Raul Constante Pereira

Lighting design and set design | Amândio Anastácio

Lighting design and rigging | António Costa

Production management | Isabel Pinto Coelho

Production assistant | Alexandra Anastácio

Photography | Inês Samina

Video | Pedro Grenha

Production | Alma d’Arame

Support | Câmara Municipal de Montemor-o-Novo

Partnership | O Espaço do Tempo

Funded by | DGArtes and the Government of Portugal