[vimeo 78027358 w=580&h=326]

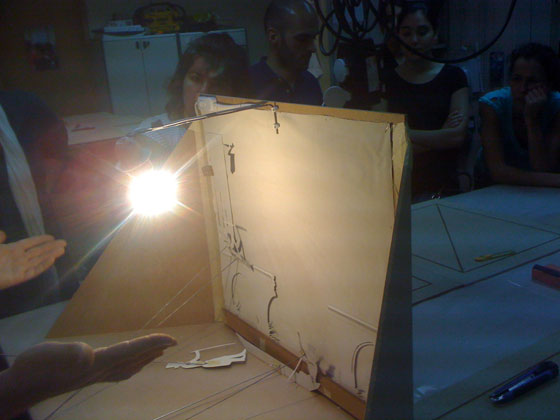

INVERSUS – an artistic installation that explores interaction using everyday objects

Why should a lamp be used only to illuminate? A speaker only to play sound? Or a fan only to produce wind?

These everyday objects are commonly used as transmission devices (of light, sound and wind).

I knew, before starting this project, that a speaker could be used as a microphone.

My aim was to find out whether a transmission device could be used as a reception device for interaction purposes.

The lamps (in this case, LEDs) were used as light sensors, the speakers (in this case, piezos) as pressure sensors, and the fan as a blow sensor.

The main concept of INVERSUS was to invert the role of transmission devices, turning them into reception devices. It is a sensitive machine that captures human interaction to produce sound and visual kinetics: a performing instrument that brings to life a mechanical flower, which spins when someone blows into the machine and casts an animated shadow, as in shadow puppetry. Inside the machine there is also a virtual marionette that reacts to pressure on the pads. The marionette is rigged with bones mapped to the pads, so pressing the pads makes the bones squash and stretch, producing animation.

The instrument was built from a washing machine, changing its meaning while absorbing its spinning metaphor, which becomes that of a mixing machine. To extend the mixing metaphor, I used a spinning wheel that creates a hypnotic effect.

Oct. 2013

More info at http://www.grifu.com/vm/?p=883#more-883

or http://www.virtualmarionette.grifu.com/