## Digital Hand Puppetry

maniPULLaction is a digital hand puppetry prototype built for a research project called Virtual Marionette. It was developed in March 2015 to evaluate hand dexterity when manipulating digital puppets in real time with the Leap Motion device. One year after its development, the maniPULLaction prototype is now released for Windows and OSX.

This prototype proposes an ergonomic mapping model that takes advantage of the full dexterity of the hand for expressive performance animation.

### Try and evaluate

You can try the model and contribute your evaluation by recording the 12 available tests: 8 tests with the puppet Luduvica and 4 tests with the Cardboard Boy puppet. If you agree to take part, write a name in the label field and start by pressing the “1” key or the “>” button. After the first test, move on to the next one by pressing the “>” button or the number keys “2”, “3”, and so on up to “8”. Then jump to the next puppet by pressing the “2” button or Shift+F2. The 4 tests with this puppet can be accessed with the keys “1”, “2”, “3” and “4” or with the “>” button. When you have finished all the tests, please send the saved files to virtual.marionette@grifu.com. You can locate or play back the files by pressing “browse”; they consist of text files and binary motion capture files.

### How to use

Simply use your hand to drive the puppets. This prototype presents 4 distinct puppets, each with a different interaction approach, which you can try by pressing the 1, 2, 3 and 4 buttons.

- The palm of the hand drives the puppet's position and orientation

- The little finger drives the eye direction (pupil) with 2 DOF (left and right)

- The ring and middle fingers are mapped to the left and right eyebrows with 1 DOF (up and down)

- The index finger drives the eyelids with 1 DOF (open and close)

- The thumb is mapped to the mouth with 1 DOF (open and close)
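
The finger-to-feature mapping above can be summarized as a small function from one frame of hand data to a set of puppet controls. This is a minimal sketch under stated assumptions, not the prototype's actual code: the names `HandFrame`, `PuppetPose` and `map_hand_to_puppet` are hypothetical, finger values are assumed to arrive already normalized to the range 0.0 to 1.0, the ring and middle fingers are read in list order (ring to left eyebrow, middle to right eyebrow), and the little finger's two DOF are assumed to be a sideways spread and a bend that move the pupils horizontally and vertically.

```python
from dataclasses import dataclass


def clamp01(x: float) -> float:
    """Clamp a value to the [0, 1] range."""
    return max(0.0, min(1.0, x))


@dataclass
class HandFrame:
    # Hypothetical per-frame hand data; finger values assumed normalized to [0, 1].
    palm_position: tuple[float, float, float]
    palm_orientation: tuple[float, float, float]  # e.g. pitch, yaw, roll
    thumb: float
    index: float
    middle: float
    ring: float
    little_x: float  # assumed: sideways spread of the little finger
    little_y: float  # assumed: bend of the little finger


@dataclass
class PuppetPose:
    position: tuple[float, float, float]
    orientation: tuple[float, float, float]
    pupil: tuple[float, float]  # 2 DOF, each in [-1, 1]
    left_brow: float            # 1 DOF: 0 = down, 1 = up
    right_brow: float           # 1 DOF: 0 = down, 1 = up
    eyelids: float              # 1 DOF: 0 = open, 1 = closed
    mouth: float                # 1 DOF: 0 = closed, 1 = open


def map_hand_to_puppet(h: HandFrame) -> PuppetPose:
    """Direct one-to-one mapping: each hand part drives one facial feature."""
    return PuppetPose(
        # The palm passes its position and orientation straight through.
        position=h.palm_position,
        orientation=h.palm_orientation,
        # Remap the little finger's [0, 1] values to a centred [-1, 1] gaze offset.
        pupil=(2 * clamp01(h.little_x) - 1, 2 * clamp01(h.little_y) - 1),
        left_brow=clamp01(h.ring),
        right_brow=clamp01(h.middle),
        eyelids=clamp01(h.index),
        mouth=clamp01(h.thumb),
    )
```

Keeping the mapping one finger per feature means each facial DOF can be played independently, which is the point of using the full hand rather than the palm alone.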

Each puppet also offers its own interaction methods:

- Luduvica – a digital glove puppet. This yellow bird offers simple manipulation by driving only the puppet's head. If you use a second hand, you get two puppets for a dramatic play. The performance test (key 7) lets you drive the puppet in depth (along the Z axis).

- Cardboard Boy (paperboy) – a digital wireless marionette (or rod puppet). This physics-based puppet extends the Luduvica interaction by introducing physics and a second hand for direct manipulation. As in traditional puppetry, you can play with the puppet using an external hand.

- Mr. Brown – a digital marionette/rod puppet. This physics-based puppet follows an approach similar to the paperboy's but offers more expressions. A remote console interface lets you drive a full set of facial expressions, and the second hand lets the digital puppeteer drive the puppet's hands (not available in this version).

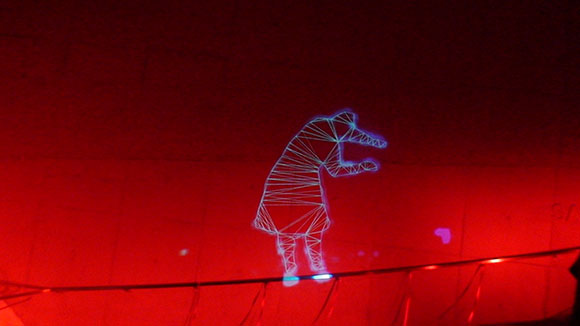

- Minster Monster – a digital muppet with an arm and hand. The face and hands are the most expressive parts of our bodies, and the same holds for monster characters. While the palm of the hand drives the puppet's head, the mouth is controlled by a pinch gesture, much like a traditional muppet. A second hand drives the monster's arm and hand for expressive animation.
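
A pinch-driven mouth like the one on Minster Monster can be approximated by normalizing the distance between the thumb tip and the index fingertip. This is a hedged sketch, not the prototype's implementation: the function name `pinch_to_mouth` and the calibration distances `closed_mm` and `open_mm` are hypothetical, and fingertip positions are assumed to be given in millimetres.

```python
import math


def pinch_to_mouth(thumb_tip, index_tip, closed_mm=20.0, open_mm=80.0):
    """Map the thumb-index fingertip distance to a mouth opening in [0, 1].

    closed_mm and open_mm are hypothetical calibration values: at or below
    closed_mm the mouth is shut, at or above open_mm it is fully open.
    """
    d = math.dist(thumb_tip, index_tip)  # Euclidean distance between fingertips
    t = (d - closed_mm) / (open_mm - closed_mm)
    return max(0.0, min(1.0, t))  # clamp so the mouth never over-opens
```

Pinching the fingers together closes the mouth and spreading them opens it, mirroring how a puppeteer's hand works inside a traditional muppet head.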

### Live cameras and lighting

To simulate a live show, you can switch between cameras, change the lighting setup, or even focus and defocus the camera.

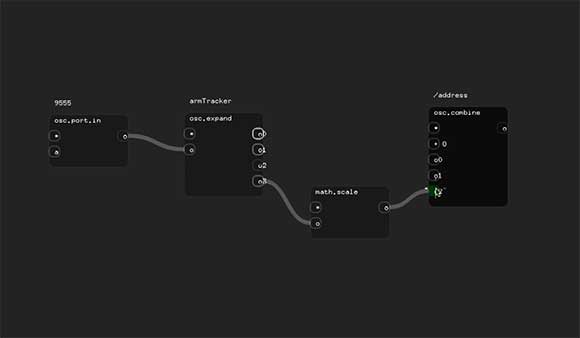

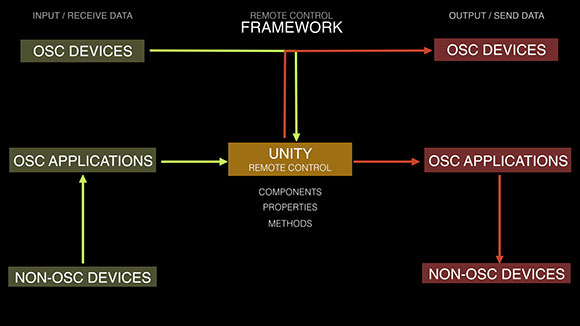

Camera switching and lighting can also be controlled remotely via OSC on port 7000, using applications such as TouchOSC (try the “simple” preset):

- Switch between the 4 cameras with the messages “/2/push1” to “/2/push4”.
- Control the lights with “/1/fader1” to “/1/fader4”.
- Focus and defocus camera 3 in the Cardboard Boy and Mr. Brown scenes with “/1/fader5”.
- Navigate through the scenes with the addresses “/2/push13” to “/2/push16”.

### Download

maniPULLaction for OSX

[wpdm_package id="1716"]

maniPULLaction for Windows

[wpdm_package id="1719"]

### Info

Developed by Grifu in 2015 for the Digital Media PhD at FEUP (Austin | Portugal), supported by FCT. It requires the Leap Motion device.

### Demo video

[vimeo 140126085 w=580&h=326]